publications

2026

-

Synthesizing scientific literature with retrieval-augmented language modelsAkari Asai, Jacqueline He, Rulin Shao, Weijia Shi, Amanpreet Singh, Joseph Chee Chang, Kyle Lo, and 21 more authorsNature, Feb 2026AI for Science Domain Adaptation, Specialization, Generalization Retrieval-Augmented LMs Training Language Models

Synthesizing scientific literature with retrieval-augmented language modelsAkari Asai, Jacqueline He, Rulin Shao, Weijia Shi, Amanpreet Singh, Joseph Chee Chang, Kyle Lo, and 21 more authorsNature, Feb 2026AI for Science Domain Adaptation, Specialization, Generalization Retrieval-Augmented LMs Training Language ModelsScientific progress depends on researchers’ ability to synthesize the growing body of literature. Can large language models (LMs) assist scientists in this task? We introduce OpenScholar, a specialized retrieval-augmented LM that answers scientific queries by identifying relevant passages from 45 million open-access papers and synthesizing citation-backed responses. To evaluate OpenScholar, we develop ScholarQABench, the first large-scale multi-domain benchmark for literature search, comprising 2,967 expert-written queries and 208 long-form answers across computer science, physics, neuroscience, and biomedicine. On ScholarQABench, OpenScholar-8B outperforms GPT-4o by 5% and PaperQA2 by 7% in correctness, despite being a smaller, open model. While GPT4o hallucinates citations 78 to 90% of the time, OpenScholar achieves citation accuracy on par with human experts. OpenScholar’s datastore, retriever, and self-feedback inference loop also improves off-the-shelf LMs: for instance, OpenScholar-GPT4o improves GPT-4o’s correctness by 12%. In human evaluations, experts preferred OpenScholar-8B and OpenScholar-GPT4o responses over expert-written ones 51% and 70% of the time, respectively, compared to GPT4o’s 32%. We open-source all of our code, models, datastore, data and a public demo.

@article{Asai2026OpenScholar, author = {Asai, Akari and He, Jacqueline and Shao, Rulin and Shi, Weijia and Singh, Amanpreet and Chang, Joseph Chee and Lo, Kyle and Soldaini, Luca and Feldman, Sergey and D'Arcy, Mike and Wadden, David and Latzke, Matt and Sparks, Jenna and Hwang, Jena D and Kishore, Varsha and Tian, Minyang and Ji, Pan and Liu, Shengyan and Tong, Hao and Wu, Bohao and Xiong, Yanyu and Zettlemoyer, Luke and Neubig, Graham and Weld, Daniel S and Downey, Doug and Yih, Wen-tau and Koh, Pang Wei and Hajishirzi, Hannaneh}, doi = {10.1038/s41586-025-10072-4}, journal = {Nature}, month = feb, title = {Synthesizing scientific literature with retrieval-augmented language models}, url = {https://www.nature.com/articles/s41586-025-10072-4}, volume = {650}, year = {2026} } -

A Human-Centric Framework for Data Attribution in Large Language ModelsAmelie Wührl, Mattes Ruckdeschel, Kyle Lo, and Anna RogersArXiv, Feb 2026

A Human-Centric Framework for Data Attribution in Large Language ModelsAmelie Wührl, Mattes Ruckdeschel, Kyle Lo, and Anna RogersArXiv, Feb 2026In the current Large Language Model (LLM) ecosystem, creators have little agency over how their data is used, and LLM users may find themselves unknowingly plagiarizing existing sources. Attribution of LLM-generated text to LLM input data could help with these challenges, but so far we have more questions than answers: what elements of LLM outputs require attribution, what goals should it serve, how should it be implemented? We contribute a human-centric data attribution framework, which situates the attribution problem within the broader data economy. Specific use cases for attribution, such as creative writing assistance or fact-checking, can be specified via a set of parameters (including stakeholder objectives and implementation criteria). These criteria are up for negotiation by the relevant stakeholder groups: creators, LLM users, and their intermediaries (publishers, platforms, AI companies). The outcome of domain-specific negotiations can be implemented and tested for whether the stakeholder goals are achieved. The proposed approach provides a bridge between methodological NLP work on data attribution, governance work on policy interventions, and economic analysis of creator incentives for a sustainable equilibrium in the data economy.

@article{Whrl2026HumancentricFramework, author = {Wührl, Amelie and Ruckdeschel, Mattes and Lo, Kyle and Rogers, Anna}, journal = {ArXiv}, month = feb, title = {A Human-Centric Framework for Data Attribution in Large Language Models}, url = {https://arxiv.org/abs/2602.10995}, volume = {2602.10995}, year = {2026} } -

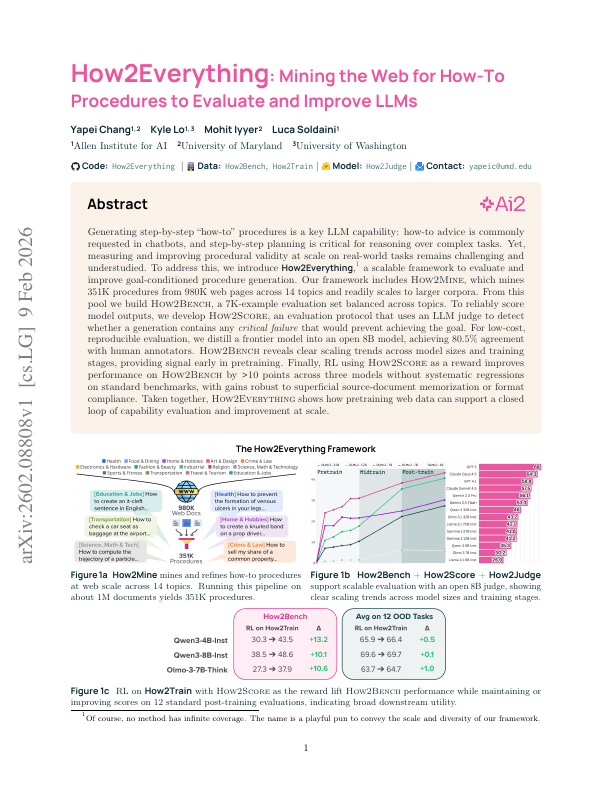

How2Everything: Mining the Web for How-To Procedures to Evaluate and Improve LLMsYapei Chang, Kyle Lo, Mohit Iyyer, and Luca SoldainiArXiv, Feb 2026

How2Everything: Mining the Web for How-To Procedures to Evaluate and Improve LLMsYapei Chang, Kyle Lo, Mohit Iyyer, and Luca SoldainiArXiv, Feb 2026Generating step-by-step "how-to" procedures is a key LLM capability: how-to advice is commonly requested in chatbots, and step-by-step planning is critical for reasoning over complex tasks. Yet, measuring and improving procedural validity at scale on real-world tasks remains challenging and understudied. To address this, we introduce How2Everything, a scalable framework to evaluate and improve goal-conditioned procedure generation. Our framework includes How2Mine, which mines 351K procedures from 980K web pages across 14 topics and readily scales to larger corpora. From this pool we build How2Bench, a 7K-example evaluation set balanced across topics. To reliably score model outputs, we develop How2Score, an evaluation protocol that uses an LLM judge to detect whether a generation contains any critical failure that would prevent achieving the goal. For low-cost, reproducible evaluation, we distill a frontier model into an open 8B model, achieving 80.5% agreement with human annotators. How2Bench reveals clear scaling trends across model sizes and training stages, providing signal early in pretraining. Finally, RL using How2Score as a reward improves performance on How2Bench by >10 points across three models without systematic regressions on standard benchmarks, with gains robust to superficial source-document memorization or format compliance. Taken together, How2Everything shows how pretraining web data can support a closed loop of capability evaluation and improvement at scale.

@article{Chang2026How2everythingMining, author = {Chang, Yapei and Lo, Kyle and Iyyer, Mohit and Soldaini, Luca}, journal = {ArXiv}, month = feb, title = {How2Everything: Mining the Web for How-To Procedures to Evaluate and Improve LLMs}, url = {https://arxiv.org/abs/2602.08808}, volume = {2602.08808}, year = {2026} } -

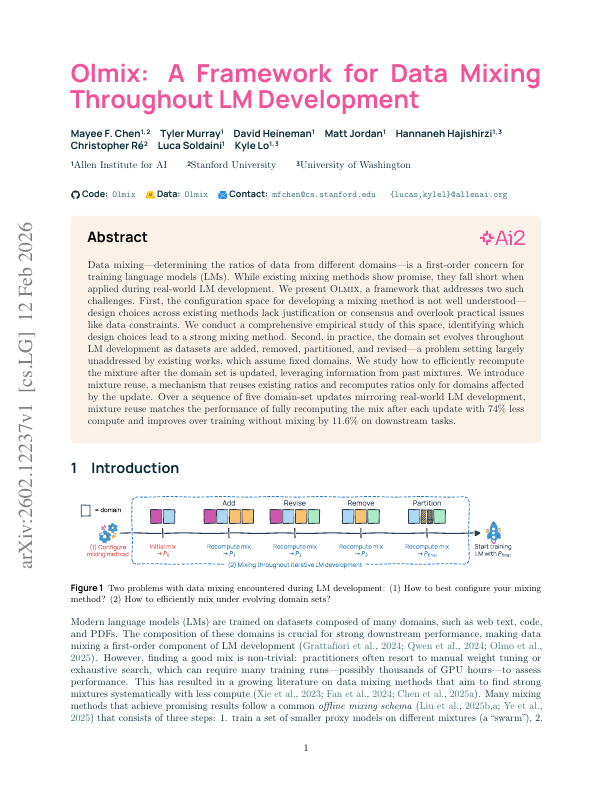

Olmix: A Framework for Data Mixing Throughout LM DevelopmentMayee F Chen, Tyler Murray, David Heineman, Matt Jordan, Hannaneh Hajishirzi, Christopher Ré, Luca Soldaini, and 1 more authorArXiv, Feb 2026

Olmix: A Framework for Data Mixing Throughout LM DevelopmentMayee F Chen, Tyler Murray, David Heineman, Matt Jordan, Hannaneh Hajishirzi, Christopher Ré, Luca Soldaini, and 1 more authorArXiv, Feb 2026Data mixing – determining the ratios of data from different domains – is a first-order concern for training language models (LMs). While existing mixing methods show promise, they fall short when applied during real-world LM development. We present Olmix, a framework that addresses two such challenges. First, the configuration space for developing a mixing method is not well understood – design choices across existing methods lack justification or consensus and overlook practical issues like data constraints. We conduct a comprehensive empirical study of this space, identifying which design choices lead to a strong mixing method. Second, in practice, the domain set evolves throughout LM development as datasets are added, removed, partitioned, and revised – a problem setting largely unaddressed by existing works, which assume fixed domains. We study how to efficiently recompute the mixture after the domain set is updated, leveraging information from past mixtures. We introduce mixture reuse, a mechanism that reuses existing ratios and recomputes ratios only for domains affected by the update. Over a sequence of five domain-set updates mirroring real-world LM development, mixture reuse matches the performance of fully recomputing the mix after each update with 74% less compute and improves over training without mixing by 11.6% on downstream tasks.

@article{Chen2026OlmixFramework, author = {Chen, Mayee F and Murray, Tyler and Heineman, David and Jordan, Matt and Hajishirzi, Hannaneh and Ré, Christopher and Soldaini, Luca and Lo, Kyle}, journal = {ArXiv}, month = feb, title = {Olmix: A Framework for Data Mixing Throughout LM Development}, url = {https://arxiv.org/abs/2602.12237}, volume = {2602.12237}, year = {2026} }

2025

-

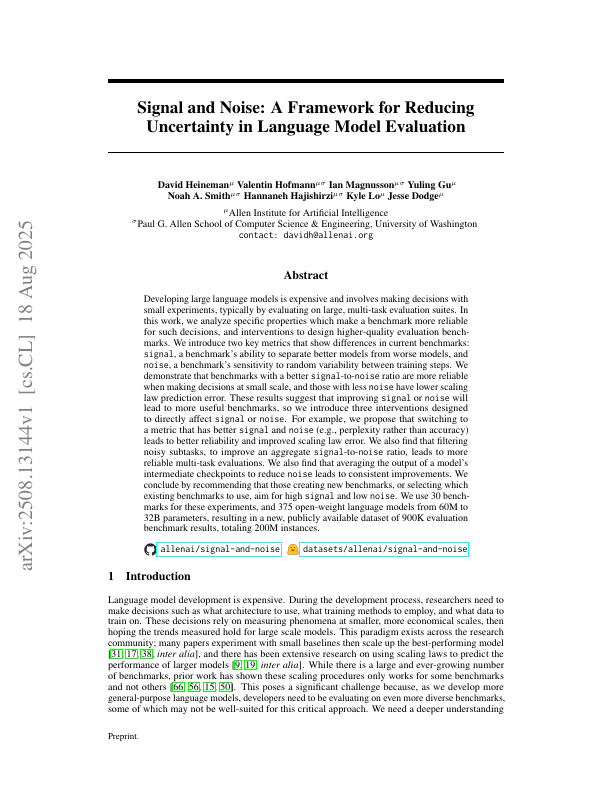

Signal and noise: A framework for reducing uncertainty in language model evaluationDavid Heineman, Valentin Hofmann, Ian Magnusson, Yuling Gu, Noah A Smith, Hannaneh Hajishirzi, Kyle Lo, and 1 more authorIn NeurIPS (Datasets and Benchmarks), Dec 2025

Signal and noise: A framework for reducing uncertainty in language model evaluationDavid Heineman, Valentin Hofmann, Ian Magnusson, Yuling Gu, Noah A Smith, Hannaneh Hajishirzi, Kyle Lo, and 1 more authorIn NeurIPS (Datasets and Benchmarks), Dec 2025Developing large language models is expensive and involves making decisions with small experiments, typically by evaluating on large, multi-task evaluation suites. In this work, we analyze specific properties which make a benchmark more reliable for such decisions, and interventions to design higher-quality evaluation benchmarks. We introduce two key metrics that show differences in current benchmarks: signal, a benchmark’s ability to separate better models from worse models, and noise, a benchmark’s sensitivity to random variability between training steps. We demonstrate that benchmarks with a better signal-to-noise ratio are more reliable when making decisions at small scale, and those with less noise have lower scaling law prediction error. These results suggest that improving signal or noise will lead to more useful benchmarks, so we introduce three interventions designed to directly affect signal or noise. For example, we propose that switching to a metric that has better signal and noise (e.g., perplexity rather than accuracy) leads to better reliability and improved scaling law error. We also find that filtering noisy subtasks, to improve an aggregate signal-to-noise ratio, leads to more reliable multi-task evaluations. We also find that averaging the output of a model’s intermediate checkpoints to reduce noise leads to consistent improvements. We conclude by recommending that those creating new benchmarks, or selecting which existing benchmarks to use, aim for high signal and low noise. We use 30 benchmarks for these experiments, and 375 open-weight language models from 60M to 32B parameters, resulting in a new, publicly available dataset of 900K evaluation benchmark results, totaling 200M instances.

@inproceedings{Heineman2025SignalAndNoise, author = {Heineman, David and Hofmann, Valentin and Magnusson, Ian and Gu, Yuling and Smith, Noah A and Hajishirzi, Hannaneh and Lo, Kyle and Dodge, Jesse}, booktitle = {NeurIPS (Datasets and Benchmarks)}, month = dec, title = {Signal and noise: A framework for reducing uncertainty in language model evaluation}, url = {https://arxiv.org/abs/2508.13144}, year = {2025} } -

FlexOlmo: Open Language Models for Flexible Data UseWeijia Shi, Akshita Bhagia, Kevin Farhat, Niklas Muennighoff, Pete Walsh, Jacob Morrison, Dustin Schwenk, and 16 more authorsIn NeurIPS, Dec 2025

FlexOlmo: Open Language Models for Flexible Data UseWeijia Shi, Akshita Bhagia, Kevin Farhat, Niklas Muennighoff, Pete Walsh, Jacob Morrison, Dustin Schwenk, and 16 more authorsIn NeurIPS, Dec 2025We introduce FlexOlmo, a new class of language models (LMs) that supports (1) distributed training without data sharing, where different model parameters are independently trained on closed datasets, and (2) data-flexible inference, where these parameters along with their associated data can be flexibly included or excluded from model inferences with no further training. FlexOlmo employs a mixture-of-experts (MoE) architecture where each expert is trained independently on closed datasets and later integrated through a new domain-informed routing without any joint training. FlexOlmo is trained on FlexMix, a corpus we curate comprising publicly available datasets alongside seven domain-specific sets, representing realistic approximations of closed sets. We evaluate models with up to 37 billion parameters (20 billion active) on 31 diverse downstream tasks. We show that a general expert trained on public data can be effectively combined with independently trained experts from other data owners, leading to an average 41% relative improvement while allowing users to opt out of certain data based on data licensing or permission requirements. Our approach also outperforms prior model merging methods by 10.1% on average and surpasses the standard MoE trained without data restrictions using the same training FLOPs. Altogether, this research presents a solution for both data owners and researchers in regulated industries with sensitive or protected data. FlexOlmo enables benefiting from closed data while respecting data owners’ preferences by keeping their data local and supporting fine-grained control of data access during inference.

@inproceedings{Shi2025FlexolmoOpenLanguage, author = {Shi, Weijia and Bhagia, Akshita and Farhat, Kevin and Muennighoff, Niklas and Walsh, Pete and Morrison, Jacob and Schwenk, Dustin and Longpre, Shayne and Poznanski, Jake and Ettinger, Allyson and Liu, Daogao and Li, Margaret and Groeneveld, Dirk and Lewis, Mike and Yih, Wen-tau and Soldaini, Luca and Lo, Kyle and Smith, Noah A and Zettlemoyer, Luke and Koh, Pang Wei and Hajishirzi, Hannaneh and Farhadi, Ali and Min, Sewon}, booktitle = {NeurIPS}, month = dec, title = {FlexOlmo: Open Language Models for Flexible Data Use}, url = {https://openreview.net/forum?id=1rUj9ZN6Bz}, year = {2025} } -

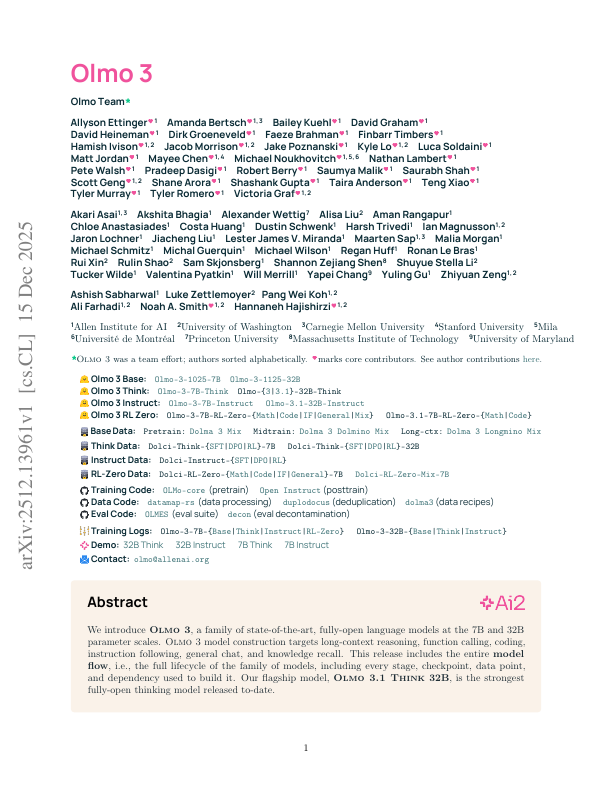

Olmo 3Team Olmo, Allyson Ettinger, Amanda Bertsch, Bailey Kuehl, David Graham, David Heineman, Dirk Groeneveld, and 61 more authorsArXiv, Dec 2025

Olmo 3Team Olmo, Allyson Ettinger, Amanda Bertsch, Bailey Kuehl, David Graham, David Heineman, Dirk Groeneveld, and 61 more authorsArXiv, Dec 2025We introduce Olmo 3, a family of state-of-the-art, fully-open language models at the 7B and 32B parameter scales. Olmo 3 model construction targets long-context reasoning, function calling, coding, instruction following, general chat, and knowledge recall. This release includes the entire model flow, i.e., the full lifecycle of the family of models, including every stage, checkpoint, data point, and dependency used to build it. Our flagship model, Olmo 3 Think 32B, is the strongest fully-open thinking model released to-date.

@article{Olmo2025Olmo3, author = {Olmo, Team and Ettinger, Allyson and Bertsch, Amanda and Kuehl, Bailey and Graham, David and Heineman, David and Groeneveld, Dirk and Brahman, Faeze and Timbers, Finbarr and Ivison, Hamish and Morrison, Jacob and Poznanski, Jake and Lo, Kyle and Soldaini, Luca and Jordan, Matt and Chen, Mayee and Noukhovitch, Michael and Lambert, Nathan and Walsh, Pete and Dasigi, Pradeep and Berry, Robert and Malik, Saumya and Shah, Saurabh and Geng, Scott and Arora, Shane and Gupta, Shashank and Anderson, Taira and Xiao, Teng and Murray, Tyler and Romero, Tyler and Graf, Victoria and Asai, Akari and Bhagia, Akshita and Wettig, Alexander and Liu, Alisa and Rangapur, Aman and Anastasiades, Chloe and Huang, Costa and Schwenk, Dustin and Trivedi, Harsh and Magnusson, Ian and Lochner, Jaron and Liu, Jiacheng and Miranda, Lester James V and Sap, Maarten and Morgan, Malia and Schmitz, Michael and Guerquin, Michal and Wilson, Michael and Huff, Regan and Bras, Ronan Le and Xin, Rui and Shao, Rulin and Skjonsberg, Sam and Shen, Shannon Zejiang and Li, Shuyue Stella and Wilde, Tucker and Pyatkin, Valentina and Merrill, Will and Chang, Yapei and Gu, Yuling and Zeng, Zhiyuan and Sabharwal, Ashish and Zettlemoyer, Luke and Koh, Pang Wei and Farhadi, Ali and Smith, Noah A and Hajishirzi, Hannaneh}, journal = {ArXiv}, month = dec, cv_authors_after = {Team Olmo}, cv_authors_before = {Team Olmo}, title = {Olmo 3}, url = {https://arxiv.org/abs/2512.13961}, volume = {2512.13961}, year = {2025} } -

Fluid language model benchmarkingValentin Hofmann, David Heineman, Ian Magnusson, Kyle Lo, Jesse Dodge, Maarten Sap, Pang Wei Koh, and 3 more authorsIn COLM, Oct 2025

Fluid language model benchmarkingValentin Hofmann, David Heineman, Ian Magnusson, Kyle Lo, Jesse Dodge, Maarten Sap, Pang Wei Koh, and 3 more authorsIn COLM, Oct 2025Language model (LM) benchmarking faces several challenges: comprehensive evaluations are costly, benchmarks often fail to measure the intended capabilities, and evaluation quality can degrade due to labeling errors and benchmark saturation. Although various strategies have been proposed to mitigate these issues, they tend to address individual aspects in isolation, neglecting broader questions about overall evaluation quality. Here, we introduce Fluid Benchmarking, a new evaluation approach that advances LM benchmarking across multiple dimensions. Inspired by psychometrics, Fluid Benchmarking is based on the insight that the relative value of benchmark items depends on an LM’s capability level, suggesting that evaluation should adapt to each LM. Methodologically, Fluid Benchmarking estimates an item response model based on existing LM evaluation results and uses the inferred quantities to select evaluation items dynamically, similar to computerized adaptive testing in education. In our experiments, we compare Fluid Benchmarking against the common practice of random item sampling as well as more sophisticated baselines, including alternative methods grounded in item response theory. We examine four dimensions – efficiency, validity, variance, and saturation – and find that Fluid Benchmarking achieves superior performance in all of them (e.g., higher validity and less variance on MMLU with fifty times fewer items). Our analysis shows that the two components of Fluid Benchmarking have distinct effects: item response theory, used to map performance into a latent ability space, increases validity, while dynamic item selection reduces variance. Overall, our results suggest that LM benchmarking can be substantially improved by moving beyond static evaluation.

@inproceedings{Hofmann2025FluidLanguageModel, author = {Hofmann, Valentin and Heineman, David and Magnusson, Ian and Lo, Kyle and Dodge, Jesse and Sap, Maarten and Koh, Pang Wei and Wang, Chun and Hajishirzi, Hannaneh and Smith, Noah A}, booktitle = {COLM}, month = oct, title = {Fluid language model benchmarking}, url = {https://arxiv.org/abs/2509.11106}, year = {2025} } -

LLMs as Research Tools: A Large Scale Survey of Researchers’ Usage and PerceptionsZhehui Liao, Maria Antoniak, Inyoung Cheong, Evie Yu-Yen Cheng, Ai-Heng Lee, Kyle Lo, Joseph Chee Chang, and 1 more authorIn COLM, Oct 2025

LLMs as Research Tools: A Large Scale Survey of Researchers’ Usage and PerceptionsZhehui Liao, Maria Antoniak, Inyoung Cheong, Evie Yu-Yen Cheng, Ai-Heng Lee, Kyle Lo, Joseph Chee Chang, and 1 more authorIn COLM, Oct 2025The rise of large language models (LLMs) has led many researchers to consider their usage for scientific work. Some have found benefits using LLMs to augment or automate aspects of their research pipeline, while others have urged caution due to risks and ethical concerns. Yet little work has sought to quantify and characterize how researchers use LLMs and why. We present the first large-scale survey of 816 verified research article authors to understand how the research community leverages and perceives LLMs as research tools. We examine participants’ self-reported LLM usage, finding that 81% of researchers have already incorporated LLMs into different aspects of their research workflow. We also find that traditionally disadvantaged groups in academia (non-White, junior, and non-native English speaking researchers) report higher LLM usage and perceived benefits, suggesting potential for improved research equity. However, women, non-binary, and senior researchers have greater ethical concerns, potentially hindering adoption.

@inproceedings{Liao2024LlmsAsResearch, author = {Liao, Zhehui and Antoniak, Maria and Cheong, Inyoung and Cheng, Evie Yu-Yen and Lee, Ai-Heng and Lo, Kyle and Chang, Joseph Chee and Zhang, Amy X}, booktitle = {COLM}, month = oct, title = {LLMs as Research Tools: A Large Scale Survey of Researchers' Usage and Perceptions}, url = {https://arxiv.org/abs/2411.05025}, year = {2025} } -

olmOCR 2: Unit Test Rewards for Document OCRJake Poznanski, Luca Soldaini, and Kyle LoArXiv, Oct 2025

olmOCR 2: Unit Test Rewards for Document OCRJake Poznanski, Luca Soldaini, and Kyle LoArXiv, Oct 2025We present olmOCR 2, the latest in our family of powerful OCR systems for converting digitized print documents, like PDFs, into clean, naturally ordered plain text. olmOCR 2 is powered by olmOCR-2-7B-1025, a specialized, 7B vision language model (VLM) trained using reinforcement learning with verifiable rewards (RLVR), where our rewards are a diverse set of binary unit tests. To scale unit test creation, we develop a pipeline for generating synthetic documents with diverse and challenging layouts, known ground-truth HTML source code, and extracted test cases. We show that RL training on these test cases results in state-of-the-art performance on olmOCR-Bench, our English-language OCR benchmark, with the largest improvements in math formula conversion, table parsing, and multi-column layouts compared to previous versions. We release our model, data and code under permissive open licenses.

@article{Poznanski2025OlmocrUnit, author = {Poznanski, Jake and Soldaini, Luca and Lo, Kyle}, journal = {ArXiv}, month = oct, title = {olmOCR 2: Unit Test Rewards for Document OCR}, url = {https://arxiv.org/abs/2510.19817}, volume = {2510.19817}, year = {2025} } -

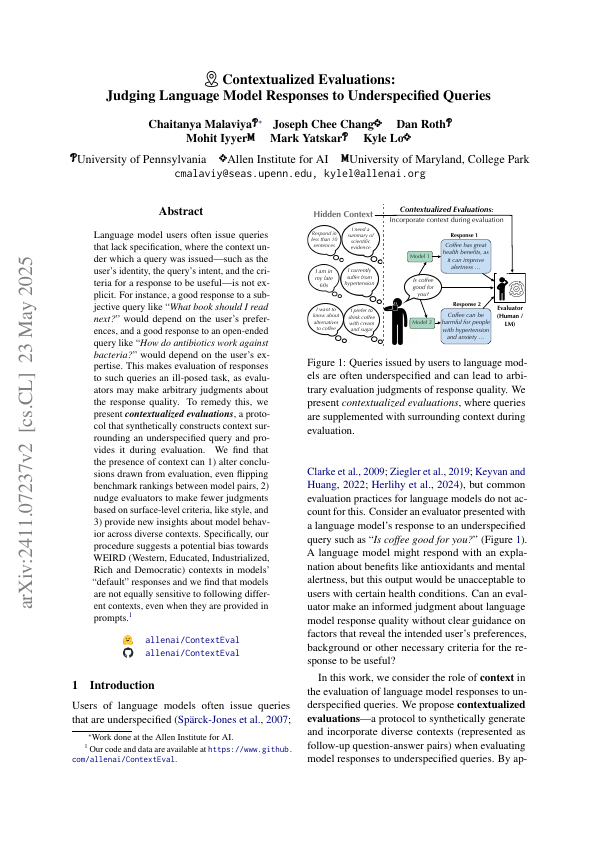

Contextualized evaluations: Judging language model responses to underspecified queriesChaitanya Malaviya, Joseph Chee Chang, Dan Roth, Mohit Iyyer, Mark Yatskar, and Kyle LoTransactions of ACL (TACL), Jul 2025

Contextualized evaluations: Judging language model responses to underspecified queriesChaitanya Malaviya, Joseph Chee Chang, Dan Roth, Mohit Iyyer, Mark Yatskar, and Kyle LoTransactions of ACL (TACL), Jul 2025Language model users often issue queries that lack specification, where the context under which a query was issued – such as the user’s identity, the query’s intent, and the criteria for a response to be useful – is not explicit. For instance, a good response to a subjective query like “What book should I read next?” would depend on the user’s preferences, and a good response to an open-ended query like “How do antibiotics work against bacteria?” would depend on the user’s expertise. This makes evaluation of responses to such queries an ill-posed task, as evaluators may make arbitrary judgments about the response quality. To remedy this, we present contextualized evaluations, a protocol that synthetically constructs context surrounding an underspecified query and provides it during evaluation. We find that the presence of context can 1) alter conclusions drawn from evaluation, even flipping benchmark rankings between model pairs, 2) nudge evaluators to make fewer judgments based on surface-level criteria, like style, and 3) provide new insights about model behavior across diverse contexts. Specifically, our procedure suggests a potential bias towards WEIRD (Western, Educated, Industrialized, Rich and Democratic) contexts in models’ “default” responses and we find that models are not equally sensitive to following different contexts, even when they are provided in prompts.

@article{Malaviya2025ContextualizedEvaluationsJudging, author = {Malaviya, Chaitanya and Chang, Joseph Chee and Roth, Dan and Iyyer, Mohit and Yatskar, Mark and Lo, Kyle}, doi = {10.1162/TACL.a.24}, journal = {Transactions of ACL (TACL)}, month = jul, title = {Contextualized evaluations: Judging language model responses to underspecified queries}, url = {https://direct.mit.edu/tacl/article/doi/10.1162/TACL.a.24/132120/}, volume = {13}, year = {2025} } -

Organize the Web: Constructing Domains Enhances Pre-Training Data CurationAlexander Wettig, Kyle Lo, Sewon Min, Hannaneh Hajishirzi, Danqi Chen, and Luca SoldainiIn ICML, Jul 2025

Organize the Web: Constructing Domains Enhances Pre-Training Data CurationAlexander Wettig, Kyle Lo, Sewon Min, Hannaneh Hajishirzi, Danqi Chen, and Luca SoldainiIn ICML, Jul 2025Modern language models are trained on large, unstructured datasets consisting of trillions of tokens and obtained by crawling the web. The unstructured nature makes it difficult to reason about their contents and develop systematic approaches to data curation. In this paper, we unpack monolithic web corpora by developing taxonomies of their contents and organizing them into domains. We introduce WebOrganizer, a framework for organizing web pages in terms of both their topic and format. Using these two complementary notions of domains, we automatically annotate pre-training data by distilling annotations from a large language model into efficient classifiers. This allows us to study how data from different domains should be mixed to improve models on downstream tasks, and we show that we can combine insights about effective topics and formats to further boost performance. We demonstrate that our domain mixing also improves existing methods that select data based on quality. Furthermore, we study and compare how quality-based methods will implicitly change the domain mixture. Overall, our work demonstrates that constructing and mixing domains provides a valuable complement to quality-based data curation methods, opening new avenues for effective and insightful pre-training data curation.

@inproceedings{Wettig2025OrganizeWeb, author = {Wettig, Alexander and Lo, Kyle and Min, Sewon and Hajishirzi, Hannaneh and Chen, Danqi and Soldaini, Luca}, booktitle = {ICML}, month = jul, title = {Organize the Web: Constructing Domains Enhances Pre-Training Data Curation}, url = {https://openreview.net/forum?id=boSqwdvJVC}, year = {2025} } -

Human-AI Collaboration: How AIs Augment Human TeammatesSherry Wu, Diyi Yang, Joseph Z Chang, Marti A Hearst, and Kyle LoIn ACL, Jul 2025

Human-AI Collaboration: How AIs Augment Human TeammatesSherry Wu, Diyi Yang, Joseph Z Chang, Marti A Hearst, and Kyle LoIn ACL, Jul 2025The continuous, rapid development of general-purpose models like LLMs suggests the theoretical possibility of AI performing any human task. Yet, despite the potential and promise, these models are far from perfect, excelling at certain tasks while struggling with others. The tension between what is possible and a model’s limitations raises the general research question that has attracted attention from various disciplines: What is the best way to use AI to maximize its benefits? In this tutorial, we will review recent developments related to human-AI teaming and collaboration. To the best of our knowledge, our tutorial will be the first to provide a more integrated view from NLP, HCI, Computational Social Science, and Learning Science, etc., and highlight how different communities have identified the goals and societal impacts of such collaborations, both positive and negative. We will further discuss how to operationalize these Human-AI collaboration goals, and reflect on how state-of-the-art AI models should be evaluated and scaffolded to make them most useful in collaborative contexts.

@inproceedings{Wu2025HumanaiCollaborationHow, author = {Wu, Sherry and Yang, Diyi and Chang, Joseph Z and Hearst, Marti A and Lo, Kyle}, booktitle = {ACL}, doi = {10.18653/v1/2025.acl-tutorials.4}, month = jul, needs_review = {true}, title = {Human-AI Collaboration: How AIs Augment Human Teammates}, url = {https://aclanthology.org/2025.acl-tutorials.4/}, year = {2025} } -

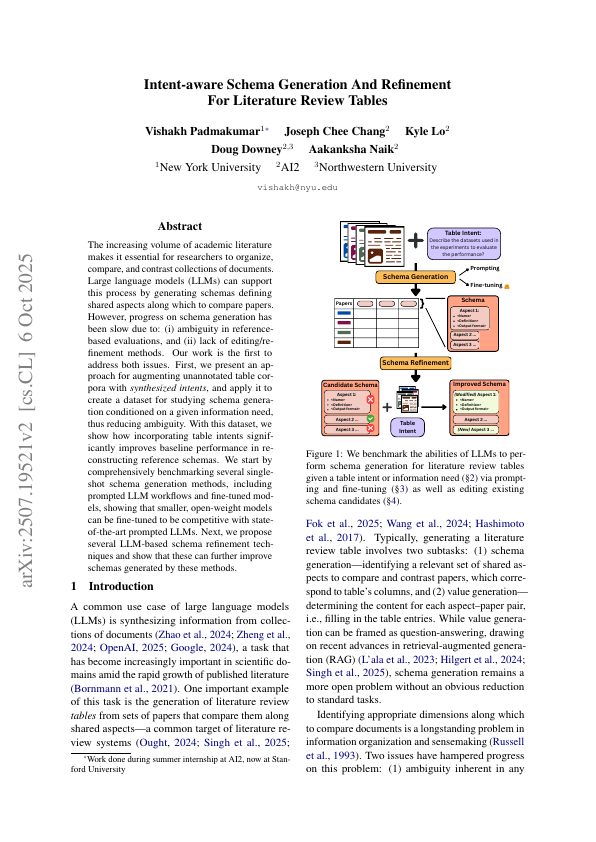

Setting The Table with Intent: Intent-aware Schema Generation and Editing for Literature Review TablesVishakh Padmakumar, Joseph Chee Chang, Kyle Lo, Doug Downey, and Aakanksha NaikArXiv, Jul 2025

Setting The Table with Intent: Intent-aware Schema Generation and Editing for Literature Review TablesVishakh Padmakumar, Joseph Chee Chang, Kyle Lo, Doug Downey, and Aakanksha NaikArXiv, Jul 2025The increasing volume of academic literature makes it essential for researchers to organize, compare, and contrast collections of documents. Large language models (LLMs) can support this process by generating schemas defining shared aspects along which to compare papers. However, progress on schema generation has been slow due to: (i) ambiguity in reference-based evaluations, and (ii) lack of editing/refinement methods. Our work is the first to address both issues. First, we present an approach for augmenting unannotated table corpora with synthesized intents, and apply it to create a dataset for studying schema generation conditioned on a given information need, thus reducing ambiguity. With this dataset, we show how incorporating table intents significantly improves baseline performance in reconstructing reference schemas. We start by comprehensively benchmarking several single-shot schema generation methods, including prompted LLM workflows and fine-tuned models, showing that smaller, open-weight models can be fine-tuned to be competitive with state-of-the-art prompted LLMs. Next, we propose several LLM-based schema refinement techniques and show that these can further improve schemas generated by these methods.

@article{Padmakumar2025SettingTable, author = {Padmakumar, Vishakh and Chang, Joseph Chee and Lo, Kyle and Downey, Doug and Naik, Aakanksha}, journal = {ArXiv}, month = jul, title = {Setting The Table with Intent: Intent-aware Schema Generation and Editing for Literature Review Tables}, url = {https://ui.adsabs.harvard.edu/abs/2025arXiv250719521P/abstract}, volume = {2507.19521}, year = {2025} } -

Molmo and PixMo: Open Weights and Open Data for State-of-the-Art Vision-Language ModelsMatt Deitke, Christopher Clark, Sangho Lee, Rohun Tripathi, Yue Yang, Jae Sung Park, Mohammadreza Salehi, and 43 more authorsIn CVPR, Jun 2025

Molmo and PixMo: Open Weights and Open Data for State-of-the-Art Vision-Language ModelsMatt Deitke, Christopher Clark, Sangho Lee, Rohun Tripathi, Yue Yang, Jae Sung Park, Mohammadreza Salehi, and 43 more authorsIn CVPR, Jun 2025Best Paper Honorable Mention

Today’s most advanced vision-language models (VLMs) remain proprietary. The strongest open-weight models rely heavily on synthetic data from proprietary VLMs to achieve good performance, effectively distilling these closed VLMs into open ones. As a result, the community has been missing foundational knowledge about how to build performant VLMs from scratch. We present Molmo, a new family of VLMs that are state-of-the-art in their class of openness. Our key contribution is a collection of new datasets called PixMo, including a dataset of highly detailed image captions for pre-training, a free-form image Q&A dataset for fine-tuning, and an innovative 2D pointing dataset, all collected without the use of external VLMs. The success of our approach relies on careful modeling choices, a well-tuned training pipeline, and, most critically, the quality of our newly collected datasets. Our best-in-class 72B model not only outperforms others in the class of open weight and data models, but also outperforms larger proprietary models including Claude 3.5 Sonnet, and Gemini 1.5 Pro and Flash, second only to GPT-4o based on both academic benchmarks and on a large human evaluation. Our model weights, new datasets, and source code are available at https://molmo.allenai.org/blog.

@inproceedings{Deitke2025MolmoAndPixmo, author = {Deitke, Matt and Clark, Christopher and Lee, Sangho and Tripathi, Rohun and Yang, Yue and Park, Jae Sung and Salehi, Mohammadreza and Muennighoff, Niklas and Lo, Kyle and Soldaini, Luca and Lu, Jiasen and Anderson, Taira and Bransom, Erin and Ehsani, Kiana and Ngo, Huong and Chen, YenSung and Patel, Ajay and Yatskar, Mark and Callison-Burch, Chris and Head, Andrew and Hendrix, Rose and Bastani, Favyen and VanderBilt, Eli and Lambert, Nathan and Chou, Yvonne and Chheda, Arnavi and Sparks, Jenna and Skjonsberg, Sam and Schmitz, Michael and Sarnat, Aaron and Bischoff, Byron and Walsh, Pete and Newell, Chris and Wolters, Piper and Gupta, Tanmay and Zeng, Kuo-Hao and Borchardt, Jon and Groeneveld, Dirk and Nam, Crystal and Lebrecht, Sophie and Wittlif, Caitlin and Schoenick, Carissa and Michel, Oscar and Krishna, Ranjay and Weihs, Luca and Smith, Noah A and Hajishirzi, Hannaneh and Girshick, Ross and Farhadi, Ali and Kembhavi, Aniruddha}, booktitle = {CVPR}, doi = {10.1109/CVPR52734.2025.00018}, month = jun, title = {Molmo and PixMo: Open Weights and Open Data for State-of-the-Art Vision-Language Models}, url = {http://openaccess.thecvf.com/content/CVPR2025/html/Deitke_Molmo_and_PixMo_Open_Weights_and_Open_Data_for_State-of-the-Art_CVPR_2025_paper.html}, year = {2025} } -

DrawEduMath: Evaluating Vision Language Models with Expert-Annotated Students’ Hand-Drawn Math ImagesSami Baral, Li Lucy, Ryan Knight, Alice Ng, Luca Soldaini, Neil T Heffernan, and Kyle LoIn NAACL, Apr 2025

DrawEduMath: Evaluating Vision Language Models with Expert-Annotated Students’ Hand-Drawn Math ImagesSami Baral, Li Lucy, Ryan Knight, Alice Ng, Luca Soldaini, Neil T Heffernan, and Kyle LoIn NAACL, Apr 2025Outstanding Paper Award

In real-world settings, vision language models (VLMs) should robustly handle naturalistic, noisy visual content as well as domain-specific language and concepts. For example, K-12 educators using digital learning platforms may need to examine and provide feedback across many images of students’ math work. To assess the potential of VLMs to support educators in settings like this one, we introduce DrawEduMath, an English-language dataset of 2,030 images of students’ handwritten responses to K-12 math problems. Teachers provided detailed annotations, including free-form descriptions of each image and 11,661 question-answer (QA) pairs. These annotations capture a wealth of pedagogical insights, ranging from students’ problem-solving strategies to the composition of their drawings, diagrams, and writing. We evaluate VLMs on teachers’ QA pairs, as well as 44,362 synthetic QA pairs derived from teachers’ descriptions using language models (LMs). We show that even state-of-the-art VLMs leave much room for improvement on DrawEduMath questions. We also find that synthetic QAs, though imperfect, can yield similar model rankings as teacher-written QAs. We release DrawEduMath to support the evaluation of VLMs’ abilities to reason mathematically over images gathered with educational contexts in mind.

@inproceedings{Baral2025DrawedumathEvaluatingVision, author = {Baral, Sami and Lucy, Li and Knight, Ryan and Ng, Alice and Soldaini, Luca and Heffernan, Neil T and Lo, Kyle}, booktitle = {NAACL}, doi = {10.18653/v1/2025.naacl-long.352}, month = apr, title = {DrawEduMath: Evaluating Vision Language Models with Expert-Annotated Students' Hand-Drawn Math Images}, url = {https://aclanthology.org/2025.naacl-long.352/}, year = {2025} } -

RouterRetriever: Routing over a Mixture of Expert Embedding ModelsHyunji Lee, Luca Soldaini, Arman Cohan, Minjoon Seo, and Kyle LoIn AAAI, Apr 2025

RouterRetriever: Routing over a Mixture of Expert Embedding ModelsHyunji Lee, Luca Soldaini, Arman Cohan, Minjoon Seo, and Kyle LoIn AAAI, Apr 2025Information retrieval methods often rely on a single embedding model trained on large, general-domain datasets like MSMARCO. While this approach can produce a retriever with reasonable overall performance, they often underperform models trained on domain-specific data when testing on their respective domains. Prior work in information retrieval has tackled this through multi-task training, but the idea of routing over a mixture of domain-specific expert retrievers remains unexplored despite the popularity of such ideas in language model generation research. In this work, we introduce RouterRetriever, a retrieval model that leverages a mixture of domain-specific experts by using a routing mechanism to select the most appropriate expert for each query. RouterRetriever is lightweight and allows easy addition or removal of experts without additional training. Evaluation on the BEIR benchmark demonstrates that RouterRetriever outperforms both models trained on MSMARCO (+2.1 absolute nDCG@10) and multi-task models (+3.2). This is achieved by employing our routing mechanism, which surpasses other routing techniques (+1.8 on average) commonly used in language modeling. Furthermore, the benefit generalizes well to other datasets, even in the absence of a specific expert on the dataset. RouterRetriever is the first work to demonstrate the advantages of routing over a mixture of domain-specific expert embedding models as an alternative to a single, general-purpose embedding model, especially when retrieving from diverse, specialized domains.

@inproceedings{Lee2024RouterRetrieverET, author = {Lee, Hyunji and Soldaini, Luca and Cohan, Arman and Seo, Minjoon and Lo, Kyle}, booktitle = {AAAI}, doi = {10.1609/aaai.v39i11.33306}, month = apr, title = {RouterRetriever: Routing over a Mixture of Expert Embedding Models}, url = {https://arxiv.org/abs/2409.02685}, volume = {2409.02685}, year = {2025} } -

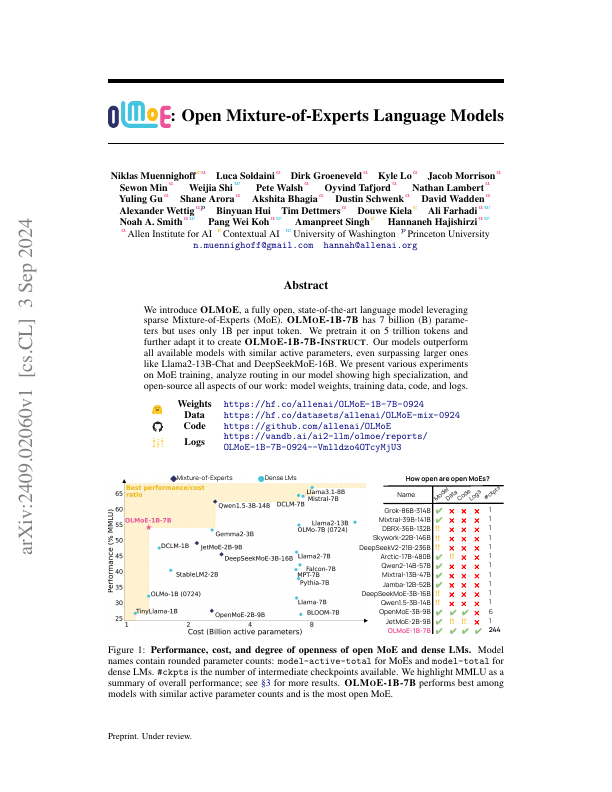

Olmoe: Open mixture-of-experts language modelsNiklas Muennighoff, Luca Soldaini, Dirk Groeneveld, Kyle Lo, Jacob Morrison, Sewon Min, Weijia Shi, and 17 more authorsIn ICLR, Apr 2025

Olmoe: Open mixture-of-experts language modelsNiklas Muennighoff, Luca Soldaini, Dirk Groeneveld, Kyle Lo, Jacob Morrison, Sewon Min, Weijia Shi, and 17 more authorsIn ICLR, Apr 2025We introduce OLMoE, a fully open, state-of-the-art language model leveraging sparse Mixture-of-Experts (MoE). OLMoE-1B-7B has 7 billion (B) parameters but uses only 1B per input token. We pretrain it on 5 trillion tokens and further adapt it to create OLMoE-1B-7B-Instruct. Our models outperform all available models with similar active parameters, even surpassing larger ones like Llama2-13B-Chat and DeepSeekMoE-16B. We present novel findings on MoE training, define and analyze new routing properties showing high specialization in our model, and open-source all our work: model weights, training data, code, and logs.

@inproceedings{Muennighoff2024OLMoEOM, author = {Muennighoff, Niklas and Soldaini, Luca and Groeneveld, Dirk and Lo, Kyle and Morrison, Jacob and Min, Sewon and Shi, Weijia and Walsh, Pete and Tafjord, Oyvind and Lambert, Nathan and Gu, Yuling and Arora, Shane and Bhagia, Akshita and Schwenk, Dustin and Wadden, David and Wettig, Alexander and Hui, Binyuan and Dettmers, Tim and Kiela, Douwe and Farhadi, Ali and Smith, Noah A and Koh, Pang Wei and Singh, Amanpreet and Hajishirzi, Hannaneh}, booktitle = {ICLR}, month = apr, title = {Olmoe: Open mixture-of-experts language models}, url = {https://openreview.net/forum?id=xXTkbTBmqq}, year = {2025} } -

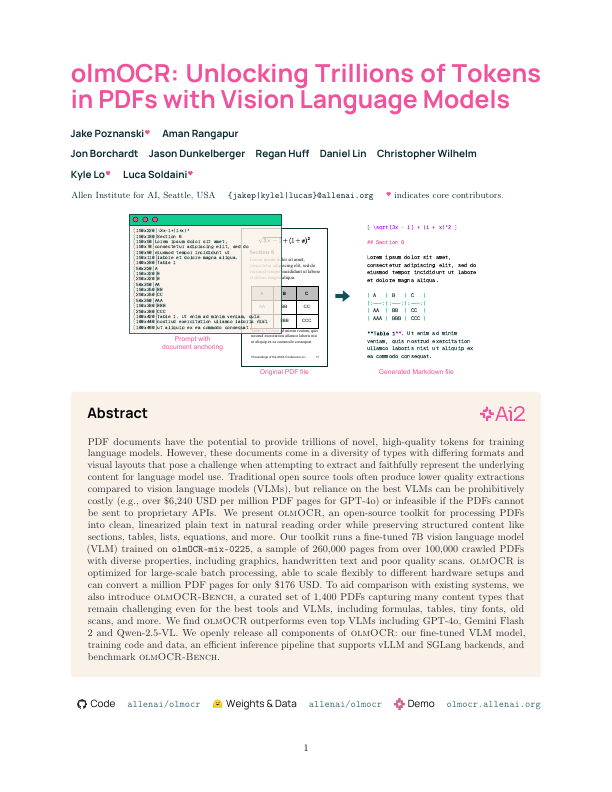

olmOCR: Unlocking Trillions of Tokens in PDFs with Vision Language ModelsJake Poznanski, Aman Rangapur, Jon Borchardt, Jason Dunkelberger, Regan Huff, Daniel Lin, Christopher Wilhelm, and 2 more authorsArXiv, Feb 2025

olmOCR: Unlocking Trillions of Tokens in PDFs with Vision Language ModelsJake Poznanski, Aman Rangapur, Jon Borchardt, Jason Dunkelberger, Regan Huff, Daniel Lin, Christopher Wilhelm, and 2 more authorsArXiv, Feb 2025PDF documents have the potential to provide trillions of novel, high-quality tokens for training language models. However, these documents come in a diversity of types with differing formats and visual layouts that pose a challenge when attempting to extract and faithfully represent the underlying content for language model use. Traditional open source tools often produce lower quality extractions compared to vision language models (VLMs), but reliance on the best VLMs can be prohibitively costly (e.g., over 6,240 USD per million PDF pages for GPT-4o) or infeasible if the PDFs cannot be sent to proprietary APIs. We present olmOCR, an open-source toolkit for processing PDFs into clean, linearized plain text in natural reading order while preserving structured content like sections, tables, lists, equations, and more. Our toolkit runs a fine-tuned 7B vision language model (VLM) trained on olmOCR-mix-0225, a sample of 260,000 pages from over 100,000 crawled PDFs with diverse properties, including graphics, handwritten text and poor quality scans. olmOCR is optimized for large-scale batch processing, able to scale flexibly to different hardware setups and can convert a million PDF pages for only 176 USD. To aid comparison with existing systems, we also introduce olmOCR-Bench, a curated set of 1,400 PDFs capturing many content types that remain challenging even for the best tools and VLMs, including formulas, tables, tiny fonts, old scans, and more. We find olmOCR outperforms even top VLMs including GPT-4o, Gemini Flash 2 and Qwen-2.5-VL. We openly release all components of olmOCR: our fine-tuned VLM model, training code and data, an efficient inference pipeline that supports vLLM and SGLang backends, and benchmark olmOCR-Bench.

@article{Poznanski2025OlmocrUnlockingTrillions, author = {Poznanski, Jake and Rangapur, Aman and Borchardt, Jon and Dunkelberger, Jason and Huff, Regan and Lin, Daniel and Wilhelm, Christopher and Lo, Kyle and Soldaini, Luca}, journal = {ArXiv}, month = feb, title = {olmOCR: Unlocking Trillions of Tokens in PDFs with Vision Language Models}, url = {https://arxiv.org/abs/2502.18443}, volume = {2502.18443}, year = {2025} } -

Automatic detection of research values from scientific abstracts across computer science subfieldsHang Jiang, Tal August, Luca Soldaini, Kyle Lo, and Maria AntoniakArXiv, Feb 2025

Automatic detection of research values from scientific abstracts across computer science subfieldsHang Jiang, Tal August, Luca Soldaini, Kyle Lo, and Maria AntoniakArXiv, Feb 2025The field of Computer science (CS) has rapidly evolved over the past few decades, providing computational tools and methodologies to various fields and forming new interdisciplinary communities. This growth in CS has significantly impacted institutional practices and relevant research communities. Therefore, it is crucial to explore what specific research values, known as basic and fundamental beliefs that guide or motivate research attitudes or actions, CS-related research communities promote. Prior research has manually analyzed research values from a small sample of machine learning papers. No prior work has studied the automatic detection of research values in CS from large-scale scientific texts across different research subfields. This paper introduces a detailed annotation scheme featuring ten research values that guide CS-related research. Based on the scheme, we build value classifiers to scale up the analysis and present a systematic study over 226,600 paper abstracts from 32 CS-related subfields and 86 popular publishing venues over ten years.

@article{Jiang2025AutomaticDetectionOf, author = {Jiang, Hang and August, Tal and Soldaini, Luca and Lo, Kyle and Antoniak, Maria}, journal = {ArXiv}, month = feb, title = {Automatic detection of research values from scientific abstracts across computer science subfields}, url = {https://arxiv.org/abs/2502.16390}, volume = {2502.16390}, year = {2025} }

2024

-

ArxivDIGESTables: Synthesizing Scientific Literature into Tables using Language ModelsBenjamin Newman, Yoonjoo Lee, Aakanksha Naik, Pao Siangliulue, Raymond Fok, Juho Kim, Daniel S Weld, and 2 more authorsIn EMNLP, Nov 2024

ArxivDIGESTables: Synthesizing Scientific Literature into Tables using Language ModelsBenjamin Newman, Yoonjoo Lee, Aakanksha Naik, Pao Siangliulue, Raymond Fok, Juho Kim, Daniel S Weld, and 2 more authorsIn EMNLP, Nov 2024When conducting literature reviews, scientists often create literature review tables - tables whose rows are publications and whose columns constitute a schema, a set of aspects used to compare and contrast the papers. Can we automatically generate these tables using language models (LMs)? In this work, we introduce a framework that leverages LMs to perform this task by decomposing it into separate schema and value generation steps. To enable experimentation, we address two main challenges: First, we overcome a lack of high-quality datasets to benchmark table generation by curating and releasing arxivDIGESTables, a new dataset of 2,228 literature review tables extracted from ArXiv papers that synthesize a total of 7,542 research papers. Second, to support scalable evaluation of model generations against human-authored reference tables, we develop DecontextEval, an automatic evaluation method that aligns elements of tables with the same underlying aspects despite differing surface forms. Given these tools, we evaluate LMs’ abilities to reconstruct reference tables, finding this task benefits from additional context to ground the generation (e.g. table captions, in-text references). Finally, through a human evaluation study we find that even when LMs fail to fully reconstruct a reference table, their generated novel aspects can still be useful.

@inproceedings{Newman2024ArxivdigestablesSynthesizingScientific, author = {Newman, Benjamin and Lee, Yoonjoo and Naik, Aakanksha and Siangliulue, Pao and Fok, Raymond and Kim, Juho and Weld, Daniel S and Chang, Joseph Chee and Lo, Kyle}, booktitle = {EMNLP}, doi = {10.18653/v1/2024.emnlp-main.538}, month = nov, title = {ArxivDIGESTables: Synthesizing Scientific Literature into Tables using Language Models}, url = {https://aclanthology.org/2024.emnlp-main.538}, year = {2024} } -

2 OLMo 2 FuriousTeam OLMo, Pete Walsh, Luca Soldaini, Dirk Groeneveld, Kyle Lo, Shane Arora, Akshita Bhagia, and 33 more authorsIn COLM, Oct 2024

2 OLMo 2 FuriousTeam OLMo, Pete Walsh, Luca Soldaini, Dirk Groeneveld, Kyle Lo, Shane Arora, Akshita Bhagia, and 33 more authorsIn COLM, Oct 2024We present OLMo 2, the next generation of our fully open language models. OLMo 2 includes dense autoregressive models with improved architecture and training recipe, pretraining data mixtures, and instruction tuning recipes. Our modified model architecture and training recipe achieve both better training stability and improved per-token efficiency. Our updated pretraining data mixture introduces a new, specialized data mix called Dolmino Mix 1124, which significantly improves model capabilities across many downstream task benchmarks when introduced via late-stage curriculum training (i.e. specialized data during the annealing phase of pretraining). Finally, we incorporate best practices from Tülu 3 to develop OLMo 2-Instruct, focusing on permissive data and extending our final-stage reinforcement learning with verifiable rewards (RLVR). Our OLMo 2 base models sit at the Pareto frontier of performance to compute, often matching or outperforming open-weight only models like Llama 3.1 and Qwen 2.5 while using fewer FLOPs and with fully transparent training data, code, and recipe. Our fully open OLMo 2-Instruct models are competitive with or surpassing open-weight only models of comparable size, including Qwen 2.5, Llama 3.1 and Gemma 2. We release all OLMo 2 artifacts openly – models at 7B and 13B scales, both pretrained and post-trained, including their full training data, training code and recipes, training logs and thousands of intermediate checkpoints. The final instruction model is available on the Ai2 Playground as a free research demo.

@inproceedings{OLMo2024Olmo, author = {OLMo, Team and Walsh, Pete and Soldaini, Luca and Groeneveld, Dirk and Lo, Kyle and Arora, Shane and Bhagia, Akshita and Gu, Yuling and Huang, Shengyi and Jordan, Matt and Lambert, Nathan and Schwenk, Dustin and Tafjord, Oyvind and Anderson, Taira and Atkinson, David and Brahman, Faeze and Clark, Christopher and Dasigi, Pradeep and Dziri, Nouha and Guerquin, Michal and Ivison, Hamish and Koh, Pang Wei and Liu, Jiacheng and Malik, Saumya and Merrill, William and Miranda, Lester James V and Morrison, Jacob and Murray, Tyler and Nam, Crystal and Pyatkin, Valentina and Rangapur, Aman and Schmitz, Michael and Skjonsberg, Sam and Wadden, David and Wilhelm, Christopher and Wilson, Michael and Zettlemoyer, Luke and Farhadi, Ali and Smith, Noah A and Hajishirzi, Hannaneh}, booktitle = {COLM}, month = oct, cv_authors_after = {Team OLMo}, cv_authors_before = {Team OLMo}, title = {2 OLMo 2 Furious}, url = {https://arxiv.org/abs/2501.00656}, year = {2024} } -

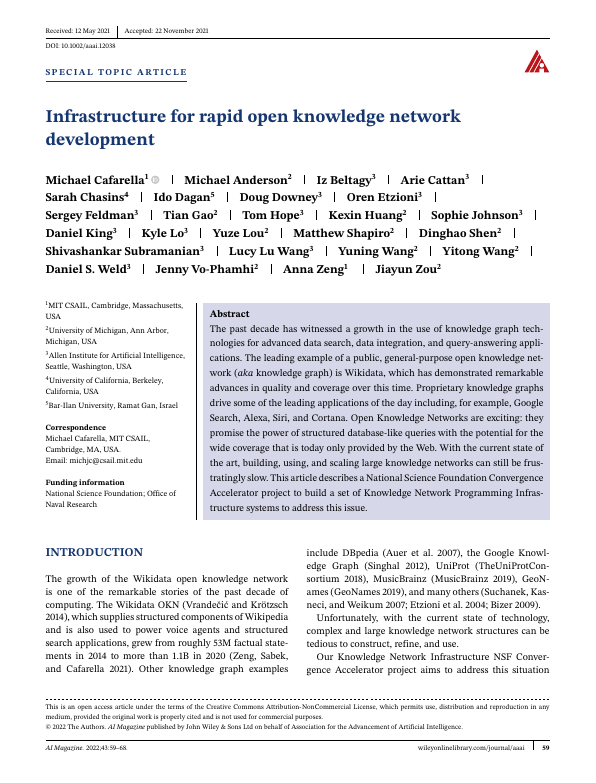

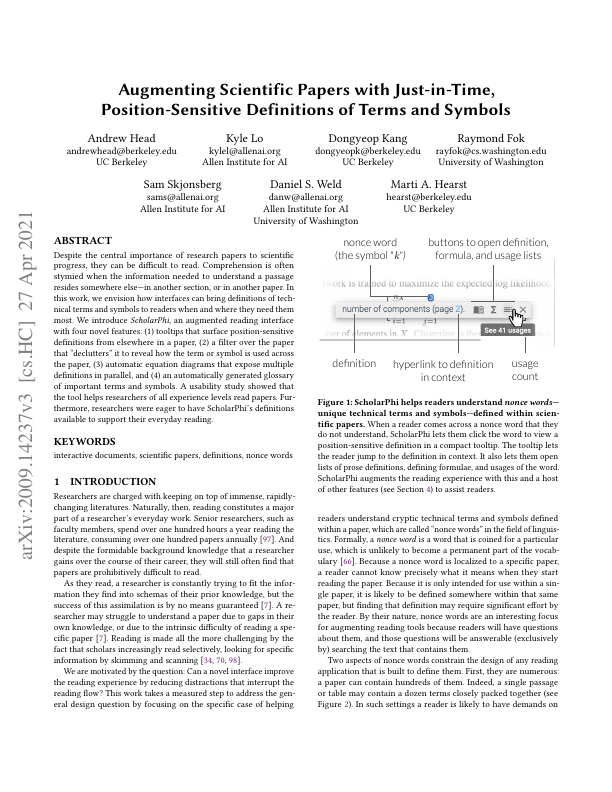

The Semantic Reader Project: Augmenting Scholarly Documents Through AI-Powered Interactive Reading InterfacesKyle Lo, Joseph Chee Chang, Andrew Head, Jonathan Bragg, Amy X. Zhang, Cassidy Trier, Chloe Anastasiades, and 48 more authorsCommunications of the ACM, Sep 2024

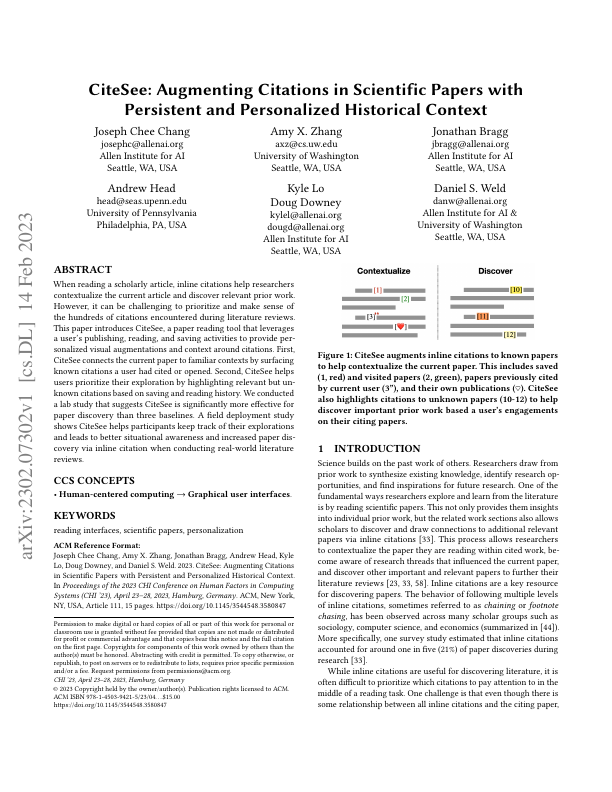

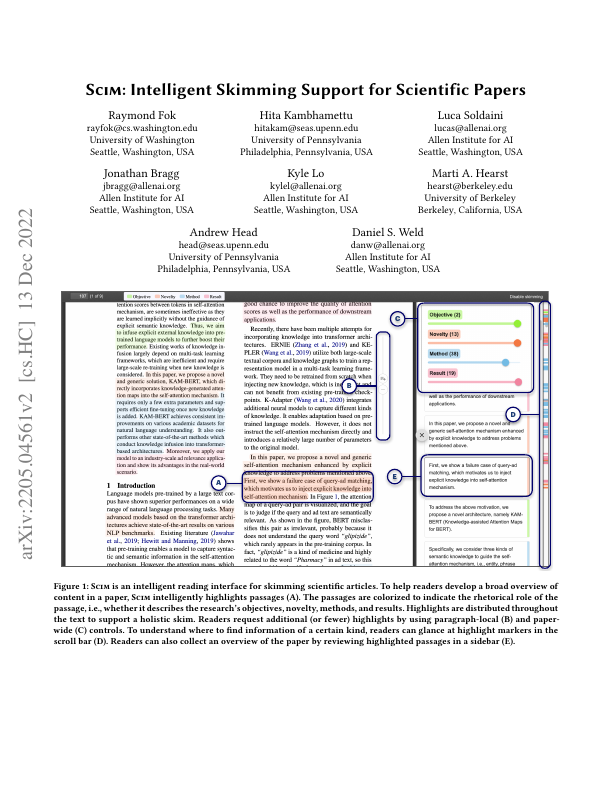

The Semantic Reader Project: Augmenting Scholarly Documents Through AI-Powered Interactive Reading InterfacesKyle Lo, Joseph Chee Chang, Andrew Head, Jonathan Bragg, Amy X. Zhang, Cassidy Trier, Chloe Anastasiades, and 48 more authorsCommunications of the ACM, Sep 2024Scholarly publications are key to the transfer of knowledge from scholars to others. However, research papers are information-dense, and as the volume of the scientific literature grows, the greater the need for new technology to support scholars. In contrast to the process of finding papers, which has been transformed by Internet technology, the experience of reading research papers has changed little in decades. For instance, the PDF format for sharing papers remains widely used due to its portability but has significant downsides, inter alia, static content and poor accessibility for low-vision readers. This paper explores the question “Can recent advances in AI and HCI power intelligent, interactive, and accessible reading interfaces—even for legacy PDFs?” We describe the Semantic Reader Project, a collaborative effort across multiple institutions to explore automatic creation of dynamic reading interfaces for research papers. Through this project, we’ve developed a collection of novel reading interfaces and evaluated them with study participants and real-world users to show improved reading experiences for scholars. We’ve also released a production research paper reading interface that will continuously incorporate novel features from our research as they mature. We structure this paper around five key opportunities for AI assistance in scholarly reading —discovery, efficiency, comprehension, synthesis, and accessibility—and present an overview of our progress and discuss remaining open challenges.Augmenting scholarly documents through AI-powered interactive reading interfaces.

@article{Lo2024SemanticReaderProject, author = {Lo, Kyle and Chang, Joseph Chee and Head, Andrew and Bragg, Jonathan and Zhang, Amy X. and Trier, Cassidy and Anastasiades, Chloe and August, Tal and Authur, Russell and Bragg, Danielle and Bransom, Erin and Cachola, Isabel and Candra, Stefan and Chandrasekhar, Yoganand and Chen, Yen-Sung and Cheng, Evie Yu-Yen and Chou, Yvonne and Downey, Doug and Evans, Rob and Fok, Raymond and Hu, Fangzhou and Huff, Regan and Kang, Dongyeop and Kim, Tae Soo and Kinney, Rodney and Kittur, Aniket and Kang, Hyeonsu B. and Klevak, Egor and Kuehl, Bailey and Langan, Michael J. and Latzke, Matt and Lochner, Jaron and MacMillan, Kelsey and Marsh, Eric and Murray, Tyler and Naik, Aakanksha and Nguyen, Ngoc-Uyen and Palani, Srishti and Park, Soya and Paulic, Caroline and Rachatasumrit, Napol and Rao, Smita and Sayre, Paul and Shen, Zejiang and Siangliulue, Pao and Soldaini, Luca and Tran, Huy and van Zuylen, Madeleine and Wang, Lucy Lu and Wilhelm, Christopher and Wu, Caroline and Yang, Jiangjiang and Zamarron, Angele and Hearst, Marti A. and Weld, Daniel S.}, doi = {10.1145/3659096}, journal = {Communications of the ACM}, month = sep, cv_authors_after = {Cassidy Trier}, cv_authors_before = {Marti Hearst}, title = {The Semantic Reader Project: Augmenting Scholarly Documents Through AI-Powered Interactive Reading Interfaces}, url = {https://doi.org/10.1145/3659096}, volume = {67}, year = {2024} } -

OLMo: Accelerating the Science of Language ModelsDirk Groeneveld, Iz Beltagy, Evan Walsh, Akshita Bhagia, Rodney Kinney, Oyvind Tafjord, Ananya Jha, and 36 more authorsIn ACL, Aug 2024

OLMo: Accelerating the Science of Language ModelsDirk Groeneveld, Iz Beltagy, Evan Walsh, Akshita Bhagia, Rodney Kinney, Oyvind Tafjord, Ananya Jha, and 36 more authorsIn ACL, Aug 2024Best Paper Award

Language models (LMs) have become ubiquitous in both NLP research and in commercial product offerings. As their commercial importance has surged, the most powerful models have become closed off, gated behind proprietary interfaces, with important details of their training data, architectures, and development undisclosed. Given the importance of these details in scientifically studying these models, including their biases and potential risks, we believe it is essential for the research community to have access to powerful, truly open LMs. To this end, we have built OLMo, a competitive, truly Open Language Model, to enable the scientific study of language models. Unlike most prior efforts that have only released model weights and inference code, we release OLMo alongside open training data and training and evaluation code. We hope this release will empower the open research community and inspire a new wave of innovation.

@inproceedings{Groeneveld2024OlmoAcceleratingScience, author = {Groeneveld, Dirk and Beltagy, Iz and Walsh, Evan and Bhagia, Akshita and Kinney, Rodney and Tafjord, Oyvind and Jha, Ananya and Ivison, Hamish and Magnusson, Ian and Wang, Yizhong and Arora, Shane and Atkinson, David and Authur, Russell and Chandu, Khyathi and Cohan, Arman and Dumas, Jennifer and Elazar, Yanai and Gu, Yuling and Hessel, Jack and Khot, Tushar and Merrill, William and Morrison, Jacob and Muennighoff, Niklas and Naik, Aakanksha and Nam, Crystal and Peters, Matthew and Pyatkin, Valentina and Ravichander, Abhilasha and Schwenk, Dustin and Shah, Saurabh and Smith, William and Strubell, Emma and Subramani, Nishant and Wortsman, Mitchell and Dasigi, Pradeep and Lambert, Nathan and Richardson, Kyle and Zettlemoyer, Luke and Dodge, Jesse and Lo, Kyle and Soldaini, Luca and Smith, Noah and Hajishirzi, Hannaneh}, booktitle = {ACL}, doi = {10.18653/v1/2024.acl-long.841}, month = aug, cv_authors_after = {Oyvind Tafjord}, cv_authors_before = {Pradeep Dasigi}, title = {{OLM}o: Accelerating the Science of Language Models}, url = {https://aclanthology.org/2024.acl-long.841}, year = {2024} } -

MathFish : Evaluating Language Model Math Reasoning via Grounding in Educational CurriculaLi Lucy, Tal August, Rose E. Wang, Luca Soldaini, Courtney Allison, and Kyle LoArXiv, Aug 2024

MathFish : Evaluating Language Model Math Reasoning via Grounding in Educational CurriculaLi Lucy, Tal August, Rose E. Wang, Luca Soldaini, Courtney Allison, and Kyle LoArXiv, Aug 2024To ensure that math curriculum is grade-appropriate and aligns with critical skills or concepts in accordance with educational standards, pedagogical experts can spend months carefully reviewing published math problems. Drawing inspiration from this process, our work presents a novel angle for evaluating language models’ (LMs) mathematical abilities, by investigating whether they can discern skills and concepts enabled by math content. We contribute two datasets: one consisting of 385 fine-grained descriptions of K-12 math skills and concepts, or standards, from Achieve the Core (ATC), and another of 9.9K math problems labeled with these standards (MathFish). We develop two tasks for evaluating LMs’ abilities to assess math problems: (1) verifying whether a problem aligns with a given standard, and (2) tagging a problem with all aligned standards. Working with experienced teachers, we find that LMs struggle to tag and verify standards linked to problems, and instead predict labels that are close to ground truth, but differ in subtle ways. We also show that LMs often generate problems that do not fully align with standards described in prompts, suggesting the need for careful scrutiny on use cases involving LMs for generating curricular materials. Finally, we categorize problems in GSM8k using math standards, allowing us to better understand why some problems are more difficult to solve for models than others.

@article{Lucy2024EvaluatingLM, author = {Lucy, Li and August, Tal and Wang, Rose E. and Soldaini, Luca and Allison, Courtney and Lo, Kyle}, journal = {ArXiv}, month = aug, title = {MathFish : Evaluating Language Model Math Reasoning via Grounding in Educational Curricula}, url = {https://arxiv.org/abs/2408.04226}, year = {2024} } -

Dolma: an Open Corpus of Three Trillion Tokens for Language Model Pretraining ResearchLuca Soldaini, Rodney Kinney, Akshita Bhagia, Dustin Schwenk, David Atkinson, Russell Authur, Ben Bogin, and 29 more authorsIn ACL, Aug 2024

Dolma: an Open Corpus of Three Trillion Tokens for Language Model Pretraining ResearchLuca Soldaini, Rodney Kinney, Akshita Bhagia, Dustin Schwenk, David Atkinson, Russell Authur, Ben Bogin, and 29 more authorsIn ACL, Aug 2024Best Paper Award

Information about pretraining corpora used to train the current best-performing language models is seldom discussed: commercial models rarely detail their data, and even open models are often released without accompanying training data or recipes to reproduce them. As a result, it is challenging to conduct and advance scientific research on language modeling, such as understanding how training data impacts model capabilities and limitations. To facilitate scientific research on language model pretraining, we curate and release Dolma, a three-trillion-token English corpus, built from a diverse mixture of web content, scientific papers, code, public-domain books, social media, and encyclopedic materials. We extensively document Dolma, including its design principles, details about its construction, and a summary of its contents. We present analyses and experimental results on intermediate states of Dolma to share what we have learned about important data curation practices. Finally, we open-source our data curation toolkit to enable reproduction of our work as well as support further research in large-scale data curation.

@inproceedings{Soldaini2024DolmaOpenCorpus, author = {Soldaini, Luca and Kinney, Rodney and Bhagia, Akshita and Schwenk, Dustin and Atkinson, David and Authur, Russell and Bogin, Ben and Chandu, Khyathi and Dumas, Jennifer and Elazar, Yanai and Hofmann, Valentin and Jha, Ananya and Kumar, Sachin and Lucy, Li and Lyu, Xinxi and Lambert, Nathan and Magnusson, Ian and Morrison, Jacob and Muennighoff, Niklas and Naik, Aakanksha and Nam, Crystal and Peters, Matthew and Ravichander, Abhilasha and Richardson, Kyle and Shen, Zejiang and Strubell, Emma and Subramani, Nishant and Tafjord, Oyvind and Walsh, Evan and Zettlemoyer, Luke and Smith, Noah and Hajishirzi, Hannaneh and Beltagy, Iz and Groeneveld, Dirk and Dodge, Jesse and Lo, Kyle}, booktitle = {ACL}, doi = {10.18653/v1/2024.acl-long.840}, month = aug, cv_authors_after = {Dustin Schwenk}, cv_authors_before = {Noah Smith}, title = {Dolma: an Open Corpus of Three Trillion Tokens for Language Model Pretraining Research}, url = {https://aclanthology.org/2024.acl-long.840}, year = {2024} } -

KIWI: A Dataset of Knowledge-Intensive Writing Instructions for Answering Research QuestionsFangyuan Xu, Kyle Lo, Luca Soldaini, Bailey Kuehl, Eunsol Choi, and David WaddenIn Findings of the Association for Computational Linguistics ACL 2024, Aug 2024

KIWI: A Dataset of Knowledge-Intensive Writing Instructions for Answering Research QuestionsFangyuan Xu, Kyle Lo, Luca Soldaini, Bailey Kuehl, Eunsol Choi, and David WaddenIn Findings of the Association for Computational Linguistics ACL 2024, Aug 2024Large language models (LLMs) adapted to follow user instructions are now widely deployed as conversational agents. In this work, we examine one increasingly common instruction-following task: providing writing assistance to compose a long-form answer. To evaluate the capabilities of current LLMs on this task, we construct KIWI, a dataset of knowledge-intensive writing instructions in the scientific domain. Given a research question, an initial model-generated answer and a set of relevant papers, an expert annotator iteratively issues instructions for the model to revise and improve its answer. We collect 1,260 interaction turns from 234 interaction sessions with three state-of-the-art LLMs. Each turn includes a user instruction, a model response, and a human evaluation of the model response. Through a detailed analysis of the collected responses, we find that all models struggle to incorporate new information into an existing answer, and to perform precise and unambiguous edits. Further, we find that models struggle to judge whether their outputs successfully followed user instructions, with accuracy at least 10 points short of human agreement. Our findings indicate that KIWI will be a valuable resource to measure progress and improve LLMs’ instruction-following capabilities for knowledge intensive writing tasks.

@inproceedings{Xu2024KiwiDatasetKnowledge, author = {Xu, Fangyuan and Lo, Kyle and Soldaini, Luca and Kuehl, Bailey and Choi, Eunsol and Wadden, David}, booktitle = {Findings of the Association for Computational Linguistics ACL 2024}, doi = {10.18653/v1/2024.findings-acl.770}, month = aug, title = {{KIWI}: A Dataset of Knowledge-Intensive Writing Instructions for Answering Research Questions}, url = {https://aclanthology.org/2024.findings-acl.770}, year = {2024} } -

DataComp-LM: In search of the next generation of training sets for language modelsJeffrey Li, Alex Fang, Georgios Smyrnis, Maor Ivgi, Matt Jordan, Samir Gadre, Hritik Bansal, and 52 more authorsIn NeurIPS (Datasets and Benchmarks), Dec 2024

DataComp-LM: In search of the next generation of training sets for language modelsJeffrey Li, Alex Fang, Georgios Smyrnis, Maor Ivgi, Matt Jordan, Samir Gadre, Hritik Bansal, and 52 more authorsIn NeurIPS (Datasets and Benchmarks), Dec 2024We introduce DataComp for Language Models (DCLM), a testbed for controlled dataset experiments with the goal of improving language models. As part of DCLM, we provide a standardized corpus of 240T tokens extracted from Common Crawl, effective pretraining recipes based on the OpenLM framework, and a broad suite of 53 downstream evaluations. Participants in the DCLM benchmark can experiment with data curation strategies such as deduplication, filtering, and data mixing at model scales ranging from 412M to 7B parameters. As a baseline for DCLM, we conduct extensive experiments and find that model-based filtering is key to assembling a high-quality training set. The resulting dataset, DCLM-Baseline enables training a 7B parameter language model from scratch to 64% 5-shot accuracy on MMLU with 2.6T training tokens. Compared to MAP-Neo, the previous state-of-the-art in open-data language models, DCLM-Baseline represents a 6.6 percentage point improvement on MMLU while being trained with 40% less compute. Our baseline model is also comparable to Mistral-7B-v0.3 and Llama 3 8B on MMLU (63% & 66%), and performs similarly on an average of 53 natural language understanding tasks while being trained with 6.6x less compute than Llama 3 8B. Our results highlight the importance of dataset design for training language models and offer a starting point for further research on data curation.

@inproceedings{Gadre2023DataCompIS, author = {Li, Jeffrey and Fang, Alex and Smyrnis, Georgios and Ivgi, Maor and Jordan, Matt and Gadre, Samir and Bansal, Hritik and Guha, Etash and Keh, Sedrick and Arora, Kushal and Garg, Saurabh and Xin, Rui and Muennighoff, Niklas and Heckel, Reinhard and Mercat, Jean and Chen, Mayee and Gururangan, Suchin and Wortsman, Mitchell and Albalak, Alon and Bitton, Yonatan and Nezhurina, Marianna and Abbas, Amro and Hsieh, Cheng-Yu and Ghosh, Dhruba and Gardner, Josh and Kilian, Maciej and Zhang, Hanlin and Shao, Rulin and Pratt, Sarah and Sanyal, Sunny and Ilharco, Gabriel and Daras, Giannis and Marathe, Kalyani and Gokaslan, Aaron and Zhang, Jieyu and Chandu, Khyathi and Nguyen, Thao and Vasiljevic, Igor and Kakade, Sham and Song, Shuran and Sanghavi, Sujay and Faghri, Fartash and Oh, Sewoong and Zettlemoyer, Luke and Lo, Kyle and El-Nouby, Alaaeldin and Pouransari, Hadi and Toshev, Alexander and Wang, Stephanie and Groeneveld, Dirk and Soldaini, Luca and Koh, Pang Wei and Jitsev, Jenia and Kollar, Thomas and Dimakis, Alexandros G. and Carmon, Yair and Dave, Achal and Schmidt, Ludwig and Shankar, Vaishaal}, booktitle = {NeurIPS (Datasets and Benchmarks)}, month = dec, title = {DataComp-LM: In search of the next generation of training sets for language models}, url = {https://proceedings.neurips.cc/paper_files/paper/2024/hash/19e4ea30dded58259665db375885e412-Abstract-Datasets_and_Benchmarks_Track.html}, year = {2024} } -

One Thousand and One Pairs: A "novel" challenge for long-context language modelsMarzena Karpinska, Katherine Thai, Kyle Lo, Tanya Goyal, and Mohit IyyerIn EMNLP, Nov 2024

One Thousand and One Pairs: A "novel" challenge for long-context language modelsMarzena Karpinska, Katherine Thai, Kyle Lo, Tanya Goyal, and Mohit IyyerIn EMNLP, Nov 2024Synthetic long-context LLM benchmarks (e.g., "needle-in-the-haystack") test only surface-level retrieval capabilities, but how well can long-context LLMs retrieve, synthesize, and reason over information across book-length inputs? We address this question by creating NoCha, a dataset of 1,001 minimally different pairs of true and false claims about 67 recently-published English fictional books, written by human readers of those books. In contrast to existing long-context benchmarks, our annotators confirm that the largest share of pairs in NoCha require global reasoning over the entire book to verify. Our experiments show that while human readers easily perform this task, it is enormously challenging for all ten long-context LLMs that we evaluate: no open-weight model performs above random chance (despite their strong performance on synthetic benchmarks), while GPT-4o achieves the highest accuracy at 55.8%. Further analysis reveals that (1) on average, models perform much better on pairs that require only sentence-level retrieval vs. global reasoning; (2) model-generated explanations for their decisions are often inaccurate even for correctly-labeled claims; and (3) models perform substantially worse on speculative fiction books that contain extensive world-building. The methodology proposed in NoCha allows for the evolution of the benchmark dataset and the easy analysis of future models.

@inproceedings{Karpinska2024OneTA, author = {Karpinska, Marzena and Thai, Katherine and Lo, Kyle and Goyal, Tanya and Iyyer, Mohit}, booktitle = {EMNLP}, doi = {10.18653/v1/2024.emnlp-main.948}, month = nov, title = {One Thousand and One Pairs: A "novel" challenge for long-context language models}, url = {https://aclanthology.org/2024.emnlp-main.948}, year = {2024} } -

SciRIFF: A Resource to Enhance Language Model Instruction-Following over Scientific LiteratureDavid Wadden, Kejian Shi, Jacob Daniel Morrison, Aakanksha Naik, Shruti Singh, Nitzan Barzilay, Kyle Lo, and 6 more authorsArXiv, Jun 2024

SciRIFF: A Resource to Enhance Language Model Instruction-Following over Scientific LiteratureDavid Wadden, Kejian Shi, Jacob Daniel Morrison, Aakanksha Naik, Shruti Singh, Nitzan Barzilay, Kyle Lo, and 6 more authorsArXiv, Jun 2024We present SciRIFF (Scientific Resource for Instruction-Following and Finetuning), a dataset of 137K instruction-following demonstrations for 54 tasks covering five essential scientific literature understanding capabilities: information extraction, summarization, question answering, claim verification, and classification. SciRIFF demonstrations are notable for their long input contexts, detailed task specifications, and complex structured outputs. While instruction-following resources are available in specific domains such as clinical medicine and chemistry, SciRIFF is the first dataset focused on extracting and synthesizing information from research literature across a wide range of scientific fields. To demonstrate the utility of SciRIFF, we develop a sample-efficient strategy to adapt a general instruction-following model for science by performing additional finetuning on a mix of general-domain and SciRIFF demonstrations. In evaluations on nine held-out scientific tasks, our model – called SciTulu – improves over a strong LLM baseline by 28.1% and 6.5% at the 7B and 70B scales respectively, while maintaining general instruction-following performance within 2% of the baseline. We are optimistic that SciRIFF will facilitate the development and evaluation of LLMs to help researchers navigate the ever-growing body of scientific literature. We release our dataset, model checkpoints, and data processing and evaluation code to enable further research.

@article{Wadden2024SciRIFFAR, author = {Wadden, David and Shi, Kejian and Morrison, Jacob Daniel and Naik, Aakanksha and Singh, Shruti and Barzilay, Nitzan and Lo, Kyle and Hope, Tom and Soldaini, Luca and Shen, Shannon Zejiang and Downey, Doug and Hajishirzi, Hanna and Cohan, Arman}, journal = {ArXiv}, month = jun, title = {SciRIFF: A Resource to Enhance Language Model Instruction-Following over Scientific Literature}, url = {https://arxiv.org/abs/2406.07835}, year = {2024} } -

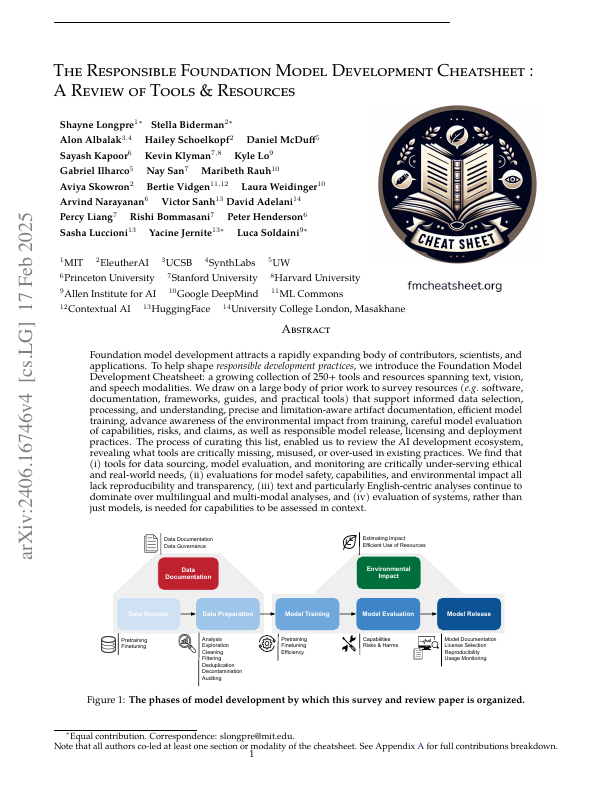

The responsible foundation model development cheatsheet: A review of tools & resourcesShayne Longpre, Stella Biderman, Alon Albalak, Hailey Schoelkopf, Daniel McDuff, Sayash Kapoor, Kevin Klyman, and 16 more authorsArXiv, Jun 2024

The responsible foundation model development cheatsheet: A review of tools & resourcesShayne Longpre, Stella Biderman, Alon Albalak, Hailey Schoelkopf, Daniel McDuff, Sayash Kapoor, Kevin Klyman, and 16 more authorsArXiv, Jun 2024Foundation model development attracts a rapidly expanding body of contributors, scientists, and applications. To help shape responsible development practices, we introduce the Foundation Model Development Cheatsheet: a growing collection of 250+ tools and resources spanning text, vision, and speech modalities. We draw on a large body of prior work to survey resources (e.g. software, documentation, frameworks, guides, and practical tools) that support informed data selection, processing, and understanding, precise and limitation-aware artifact documentation, efficient model training, advance awareness of the environmental impact from training, careful model evaluation of capabilities, risks, and claims, as well as responsible model release, licensing and deployment practices. We hope this curated collection of resources helps guide more responsible development. The process of curating this list, enabled us to review the AI development ecosystem, revealing what tools are critically missing, misused, or over-used in existing practices. We find that (i) tools for data sourcing, model evaluation, and monitoring are critically under-serving ethical and real-world needs, (ii) evaluations for model safety, capabilities, and environmental impact all lack reproducibility and transparency, (iii) text and particularly English-centric analyses continue to dominate over multilingual and multi-modal analyses, and (iv) evaluation of systems, rather than just models, is needed so that capabilities and impact are assessed in context.

@article{Longpre2024ResponsibleFoundation, author = {Longpre, Shayne and Biderman, Stella and Albalak, Alon and Schoelkopf, Hailey and McDuff, Daniel and Kapoor, Sayash and Klyman, Kevin and Lo, Kyle and Ilharco, Gabriel and San, Nay and Rauh, Maribeth and Skowron, Aviya and Vidgen, Bertie and Weidinger, Laura and Narayanan, Arvind and Sanh, Victor and Adelani, David and Liang, Percy and Bommasani, Rishi and Henderson, Peter and Luccioni, Sasha and Jernite, Yacine and Soldaini, Luca}, journal = {ArXiv}, month = jun, title = {The responsible foundation model development cheatsheet: A review of tools & resources}, url = {https://arxiv.org/abs/2406.16746}, volume = {2406.16746}, year = {2024} } -

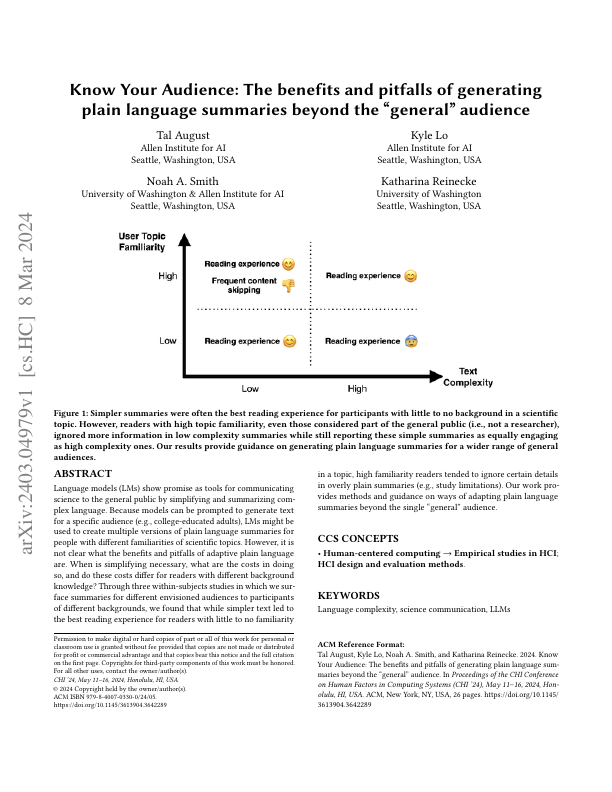

Know Your Audience: The benefits and pitfalls of generating plain language summaries beyond the "general" audienceTal August, Kyle Lo, Noah A. Smith, and Katharina ReineckeIn CHI, May 2024

Know Your Audience: The benefits and pitfalls of generating plain language summaries beyond the "general" audienceTal August, Kyle Lo, Noah A. Smith, and Katharina ReineckeIn CHI, May 2024Language models (LMs) show promise as tools for communicating science to the general public by simplifying and summarizing complex language. Because models can be prompted to generate text for a specific audience (e.g., college-educated adults), LMs might be used to create multiple versions of plain language summaries for people with different familiarities of scientific topics. However, it is not clear what the benefits and pitfalls of adaptive plain language are. When is simplifying necessary, what are the costs in doing so, and do these costs differ for readers with different background knowledge? Through three within-subjects studies in which we surface summaries for different envisioned audiences to participants of different backgrounds, we found that while simpler text led to the best reading experience for readers with little to no familiarity in a topic, high familiarity readers tended to ignore certain details in overly plain summaries (e.g., study limitations). Our work provides methods and guidance on ways of adapting plain language summaries beyond the single “general” audience.

@inproceedings{August2024KnowYourAudience, author = {August, Tal and Lo, Kyle and Smith, Noah A. and Reinecke, Katharina}, booktitle = {CHI}, doi = {10.1145/3613904.3642289}, month = may, title = {Know Your Audience: The benefits and pitfalls of generating plain language summaries beyond the "general" audience}, url = {https://doi.org/10.1145/3613904.3642289}, year = {2024} } -

Booookscore: A systematic exploration of book-length summarization in the era of llmsYapei Chang, Kyle Lo, Tanya Goyal, and Mohit IyyerIn ICLR, May 2024

Booookscore: A systematic exploration of book-length summarization in the era of llmsYapei Chang, Kyle Lo, Tanya Goyal, and Mohit IyyerIn ICLR, May 2024Summarizing book-length documents (100K tokens) that exceed the context window size of large language models (LLMs) requires first breaking the input document into smaller chunks and then prompting an LLM to merge, update, and compress chunk-level summaries. Despite the complexity and importance of this task, it has yet to be meaningfully studied due to the challenges of evaluation: existing book-length summarization datasets (e.g., BookSum) are in the pretraining data of most public LLMs, and existing evaluation methods struggle to capture errors made by modern LLM summarizers. In this paper, we present the first study of the coherence of LLM-based book-length summarizers implemented via two prompting workflows: (1) hierarchically merging chunk-level summaries, and (2) incrementally updating a running summary. We obtain 1193 fine-grained human annotations on GPT-4 generated summaries of 100 recently-published books and identify eight common types of coherence errors made by LLMs. Because human evaluation is expensive and time-consuming, we develop an automatic metric, BooookScore, that measures the proportion of sentences in a summary that do not contain any of the identified error types. BooookScore has high agreement with human annotations and allows us to systematically evaluate the impact of many other critical parameters (e.g., chunk size, base LLM) while saving $15K USD and 500 hours in human evaluation costs. We find that closed-source LLMs such as GPT-4 and Claude 2 produce summaries with higher BooookScore than those generated by open-source models. While LLaMA 2 falls behind other models, Mixtral achieves performance on par with GPT-3.5-Turbo. Incremental updating yields lower BooookScore but higher level of detail than hierarchical merging, a trade-off sometimes preferred by annotators. We release code and annotations to spur more principled research on book-length summarization.

@inproceedings{Chang2023BooookScoreAS, author = {Chang, Yapei and Lo, Kyle and Goyal, Tanya and Iyyer, Mohit}, booktitle = {ICLR}, month = may, title = {Booookscore: A systematic exploration of book-length summarization in the era of llms}, url = {https://openreview.net/forum?id=7Ttk3RzDeu}, year = {2024} } -

FollowIR: Evaluating and Teaching Information Retrieval Models to Follow InstructionsOrion Weller, Benjamin Chang, Sean MacAvaney, Kyle Lo, Arman Cohan, Benjamin Van Durme, Dawn Lawrie, and 1 more authorArXiv, May 2024

FollowIR: Evaluating and Teaching Information Retrieval Models to Follow InstructionsOrion Weller, Benjamin Chang, Sean MacAvaney, Kyle Lo, Arman Cohan, Benjamin Van Durme, Dawn Lawrie, and 1 more authorArXiv, May 2024Modern Language Models (LMs) are capable of following long and complex instructions that enable a large and diverse set of user requests. While Information Retrieval (IR) models use these LMs as the backbone of their architectures, virtually none of them allow users to provide detailed instructions alongside queries, thus limiting their ability to satisfy complex information needs. In this work, we study the use of instructions in IR systems. First, we introduce our dataset FollowIR, which contains a rigorous instruction evaluation benchmark as well as a training set for helping IR models learn to better follow real-world instructions. FollowIR repurposes detailed instructions – also known as narratives – developed for professional assessors to evaluate retrieval systems. In particular, we build our benchmark from three collections curated for shared tasks at the Text REtrieval Conference (TREC). These collections contains hundreds to thousands of labeled documents per query, making them suitable for our exploration. Through this process, we can measure how well IR models follow instructions, through a new pairwise evaluation framework. Our results indicate that existing retrieval models fail to correctly use instructions, using them for basic keywords and struggling to understand long-form information. However, we show that it is possible for IR models to learn to follow complex instructions: our new FollowIR-7B model has significant improvements after fine-tuning on our training set.